# Physical environment configuration

This section outlines the steps to configure the physical environment used in this solution.

# Cabling the HPE Synergy 12000 Frames and HPE Virtual Connect 40Gb SE F8 Modules for HPE Synergy

This section shows the physical cabling between the frames, the Virtual Connect modules, and the solution switching. It documents how the infrastructure was interconnected during testing and is intended as a guide on which the installation user can base their own configuration.

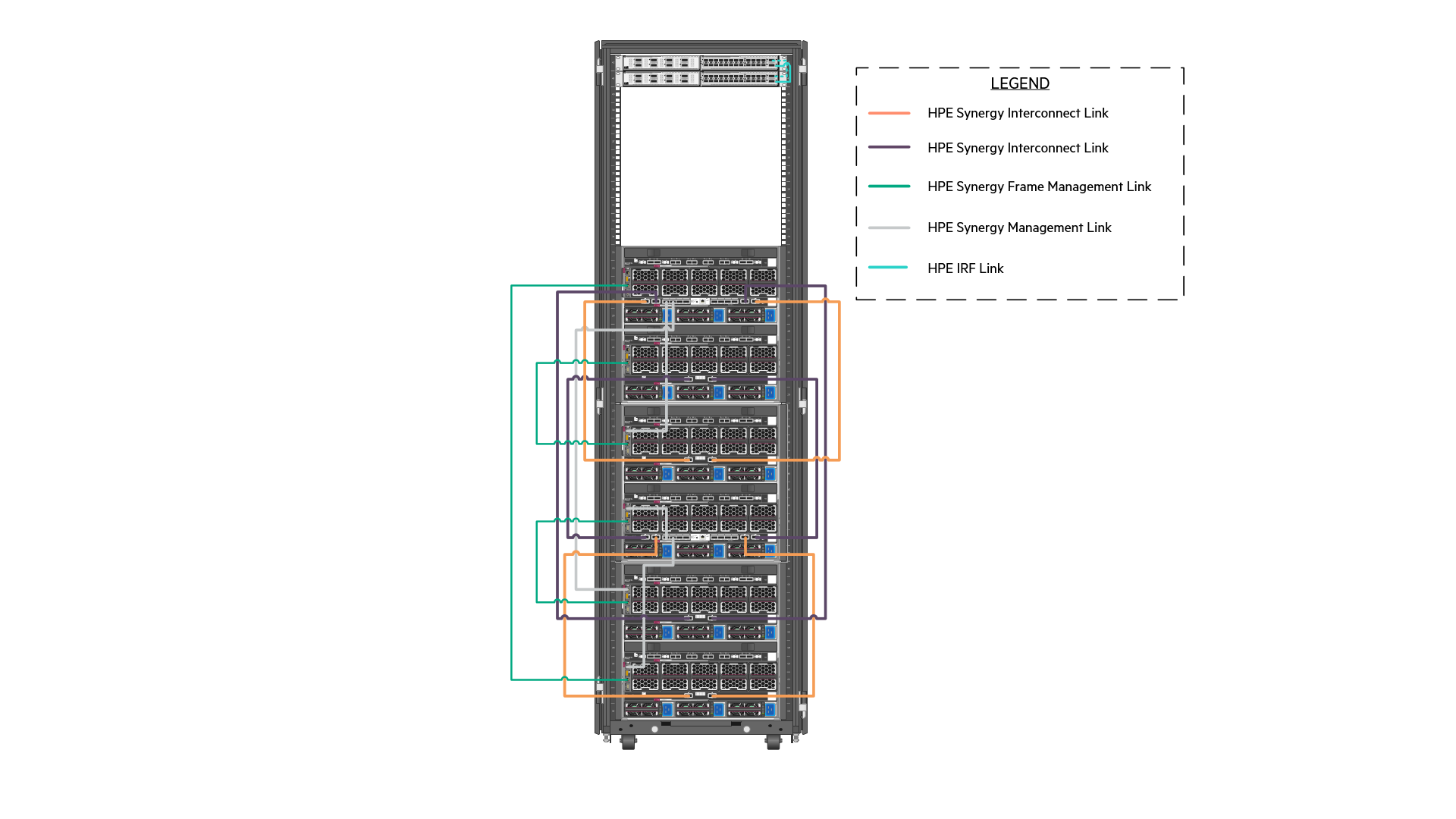

Figure 5 shows the cabling of the HPE Synergy Interconnects, HPE Synergy Frame Management, and HPE Intelligent Resilient Framework (IRF) connections. These connections carry east-west network traffic as well as management traffic within the solution.

Figure 5. Cabling of the management and inter-frame communication links within the solution

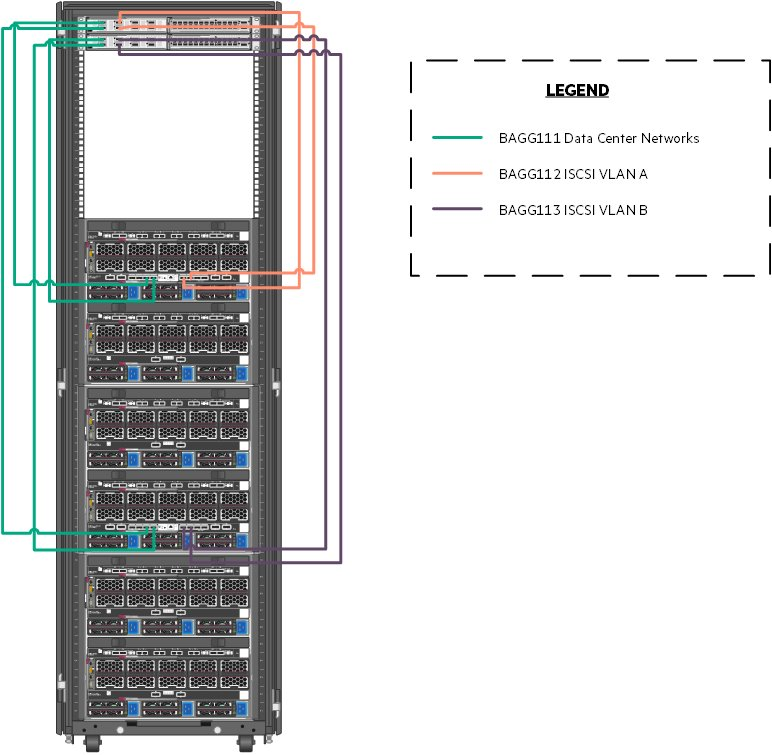

Figure 6 shows the cabling of the HPE Synergy Frames to the network switches. The specific networks carried within the Bridge-Aggregation Groups (BAGGs) are described in detail later in this document. At the lowest level, four (4) 40GbE connections are dedicated to carrying redundant production network traffic to the first-layer switch, where it is further distributed.

Figure 6. Cabling of the HPE Synergy Interconnects to the HPE FlexFabric 5945 switches

Table 5 explains the cabling of the Virtual Connect interconnect modules to the HPE FlexFabric 5945 switching.

Table 5. Networks used in this solution.

| Uplink Set | Synergy Source | Switch Destination |
|---|---|---|
| Network | Enclosure 1 Port Q3 | FortyGigE1/1/1 |
| | Enclosure 1 Port Q4 | FortyGigE2/1/1 |
| | Enclosure 2 Port Q3 | FortyGigE1/1/2 |
| | Enclosure 2 Port Q4 | FortyGigE2/1/2 |
| iSCSI_A_network | Enclosure 1 Port Q5 | FortyGigE1/1/5 |
| | Enclosure 1 Port Q6 | FortyGigE1/1/6 |
| iSCSI_B_network | Enclosure 2 Port Q5 | FortyGigE2/1/5 |
| | Enclosure 2 Port Q6 | FortyGigE2/1/6 |
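The redundancy built into this cabling can be checked mechanically. The following Python sketch encodes the cabling table as data and reports which IRF members and enclosures each uplink set touches. It assumes that the first number in a Comware interface name such as FortyGigE2/1/5 is the IRF member ID, following the usual member/slot/port convention. The names `CABLING`, `irf_member`, `enclosure`, and `summarize` are illustrative only.

```python
import string

# Uplink set -> list of (Synergy source port, switch destination port), from the cabling table.
CABLING = {
    "Network": [
        ("Enclosure 1 Port Q3", "FortyGigE1/1/1"),
        ("Enclosure 1 Port Q4", "FortyGigE2/1/1"),
        ("Enclosure 2 Port Q3", "FortyGigE1/1/2"),
        ("Enclosure 2 Port Q4", "FortyGigE2/1/2"),
    ],
    "iSCSI_A_network": [
        ("Enclosure 1 Port Q5", "FortyGigE1/1/5"),
        ("Enclosure 1 Port Q6", "FortyGigE1/1/6"),
    ],
    "iSCSI_B_network": [
        ("Enclosure 2 Port Q5", "FortyGigE2/1/5"),
        ("Enclosure 2 Port Q6", "FortyGigE2/1/6"),
    ],
}


def irf_member(interface: str) -> int:
    """Return the IRF member ID, assumed to be the first field of member/slot/port."""
    numbering = interface.lstrip(string.ascii_letters)  # "FortyGigE2/1/5" -> "2/1/5"
    return int(numbering.split("/")[0])


def enclosure(source: str) -> int:
    """Return the enclosure number from a label such as 'Enclosure 1 Port Q3'."""
    return int(source.split()[1])


def summarize(cabling):
    """Map each uplink set to (sorted IRF members, sorted enclosures) that it uses."""
    return {
        uplink: (
            sorted({irf_member(dst) for _, dst in links}),
            sorted({enclosure(src) for src, _ in links}),
        )
        for uplink, links in cabling.items()
    }


if __name__ == "__main__":
    for uplink, (members, enclosures) in summarize(CABLING).items():
        print(f"{uplink}: IRF members {members}, enclosures {enclosures}")
```

Run against this cabling, the sketch reports that the Network uplink set spans both IRF members and both enclosures. iSCSI_A_network stays on member 1 and enclosure 1, and iSCSI_B_network stays on member 2 and enclosure 2. This layout is consistent with separate A and B iSCSI paths.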

# Configuring the solution switching

The solution described in this document uses HPE FlexFabric 5945 switches. These switches are configured according to the parameters given later in this section, which also describes the individual port configuration. The switches should be configured as an HPE Intelligent Resilient Framework (IRF) fabric. To understand the process of configuring IRF, refer to the HPE FlexFabric 5945 Switch Series Installation Guide at https://support.hpe.com/hpsc/doc/public/display?sp4ts.oid=null&docLocale=en_US&docId=emr_na-c05212026. This guide also covers the initial installation of the switches, creation of user accounts, and access methods. The remainder of this section assumes that the switches have been installed, configured for IRF, hardened, and are accessible over SSH.

NOTE

The installation user might choose to use end-of-row switching to reduce switch and port counts in the context of the solution. If you take the end-of-row approach, use this section as guidance on how to route network traffic outside of the HPE Synergy Frames.

# Physical cabling

Table 6 maps source ports to ports on the HPE FlexFabric 5945 switches.

Table 6. HPE FlexFabric 5945 port map

| Source Port | Switch Port |
|---|---|
| Nimble Management Port Eth1 | TenGigE1/2/17 |
| Nimble Controller A TG1 | TenGigE1/2/13 |
| Nimble Controller A TG2 | TenGigE2/2/13 |
| Nimble Controller B TG1 | TenGigE1/2/14 |
| Nimble Controller B TG2 | TenGigE2/2/14 |
| Nimble Replication Port Eth2 | TenGigE1/2/15 |
| Virtual Connect Frame U30, Q3 | FortyGigE1/1/1 |
| Virtual Connect Frame U30, Q4 | FortyGigE2/1/1 |
| Virtual Connect Frame U30, Q5 | FortyGigE1/1/5 |
| Virtual Connect Frame U30, Q6 | FortyGigE1/1/6 |
| Virtual Connect Frame U40, Q3 | FortyGigE1/1/2 |
| Virtual Connect Frame U40, Q4 | FortyGigE2/1/2 |
| Virtual Connect Frame U40, Q5 | FortyGigE2/1/5 |
| Virtual Connect Frame U40, Q6 | FortyGigE2/1/6 |
| To Upstream Switching | Customer Choice |

Hewlett Packard Enterprise recommends that, after configuration, the installation user log on to the switch and provide a description for each of these ports.
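One way to apply these descriptions consistently is to generate the commands from the port map. The Python sketch below is illustrative. It reuses the interface names exactly as printed in the port map table, with the second Controller B port listed as TG2 to match Table 11. These names may need adjusting to the interface names the switch actually reports. The site-specific upstream row is omitted. You could paste the printed lines into the SSH session and then run save.

```python
# (source port, switch port) pairs from the port map table; the upstream row is site specific.
PORT_MAP = [
    ("Nimble Management Port Eth1", "TenGigE1/2/17"),
    ("Nimble Controller A TG1", "TenGigE1/2/13"),
    ("Nimble Controller A TG2", "TenGigE2/2/13"),
    ("Nimble Controller B TG1", "TenGigE1/2/14"),
    ("Nimble Controller B TG2", "TenGigE2/2/14"),
    ("Nimble Replication Port Eth2", "TenGigE1/2/15"),
    ("Virtual Connect Frame U30, Q3", "FortyGigE1/1/1"),
    ("Virtual Connect Frame U30, Q4", "FortyGigE2/1/1"),
    ("Virtual Connect Frame U30, Q5", "FortyGigE1/1/5"),
    ("Virtual Connect Frame U30, Q6", "FortyGigE1/1/6"),
    ("Virtual Connect Frame U40, Q3", "FortyGigE1/1/2"),
    ("Virtual Connect Frame U40, Q4", "FortyGigE2/1/2"),
    ("Virtual Connect Frame U40, Q5", "FortyGigE2/1/5"),
    ("Virtual Connect Frame U40, Q6", "FortyGigE2/1/6"),
]


def description_commands(port_map):
    """Build Comware CLI lines that set a description on each mapped switch port."""
    commands = ["system-view"]
    for source, port in port_map:
        # Enter interface view, label the port with what is cabled to it, return to system view.
        commands += [f"interface {port}", f"description {source}", "quit"]
    return commands


if __name__ == "__main__":
    print("\n".join(description_commands(PORT_MAP)))
```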

# Network definition

There are multiple networks defined at the switch layer in this solution:

- Management Network: facilitates the management of the hardware and software administered by IT.

- Data Center Network: carries traffic from the overlay network used by the pods to external consumers of pod-deployed services.

- iSCSI A Network: facilitates iSCSI storage connectivity.

- iSCSI B Network: facilitates iSCSI storage connectivity.

Table 7 defines the VLANs configured using HPE Synergy Composer in the creation of this solution. These networks should be defined both at the first-layer switch and within HPE Synergy Composer. This solution uses unique VLANs for the data center and solution management segments. The actual VLANs and network count will be determined by the requirements of your production environment.

Table 7. Networks used in this solution.

| Network Function | VLAN Number | Bridge Aggregation Group |
|---|---|---|
| Solution_Management | 1193 | 111 |
| Data_Center | 2193 | 111 |
| iSCSI_vLAN_A | 3193 | 112 |
| iSCSI_vLAN_B | 3194 | 113 |

# Configure VLAN

This section details the steps required to configure a VLAN.

- To add these networks to the switch, log on to the switch console over SSH and run the following commands.

> system-view
> vlan 1193 2193 3193 3194

- For each of these VLANs, perform the following steps.

> vlan ####
> name <VLAN name from Table 7>
> description <text that describes the purpose of the VLAN>
> quit
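The same four VLANs are defined again later in HPE Synergy Composer, so it can help to keep them in one list and generate the switch commands from it. The Python sketch below uses the VLAN IDs and names from Table 7. The descriptions are paraphrased from the Network definition section and are illustrative only. The range check reflects the usable IEEE 802.1Q VLAN IDs (1 to 4094).

```python
# (VLAN ID, name, description): IDs and names from Table 7, descriptions illustrative.
VLANS = [
    (1193, "Solution_Management", "Management of hardware and software"),
    (2193, "Data_Center", "Pod overlay traffic to external consumers"),
    (3193, "iSCSI_vLAN_A", "iSCSI storage connectivity A"),
    (3194, "iSCSI_vLAN_B", "iSCSI storage connectivity B"),
]


def vlan_commands(vlans):
    """Return Comware CLI lines that create, name, and describe each VLAN."""
    commands = ["system-view"]
    for vlan_id, name, description in vlans:
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN ID {vlan_id} is outside the 802.1Q range 1-4094")
        # "vlan <id>" creates the VLAN if needed and enters VLAN view.
        commands += [f"vlan {vlan_id}", f"name {name}", f"description {description}", "quit"]
    return commands


if __name__ == "__main__":
    print("\n".join(vlan_commands(VLANS)))
```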

NOTE

Hewlett Packard Enterprise strongly recommends configuring a dummy VLAN on the switches and assigning unused ports to that VLAN.

- The switches should be configured with a bridge aggregation group (BAGG) for the different links to the HPE Synergy Frame connections. To configure the BAGG and ports as described in Table 5, run the following commands.

> interface Bridge-Aggregation111
> link-aggregation mode dynamic
> description <FrameNameU30>-ICM
> quit
> interface range name <FrameNameU30>-ICM interface Bridge-Aggregation111
> quit
> interface range FortyGigE 1/1/1 to FortyGigE 1/1/2 FortyGigE 2/1/1 to FortyGigE 2/1/2
> port link-aggregation group 111
> quit
> interface range name <FrameNameU30>-ICM
> port link-type trunk
> undo port trunk permit vlan 1
> port trunk permit vlan 1193 2193
> quit

- For the VLANs that will carry iSCSI traffic, run the following commands.

> interface Bridge-Aggregation112
> link-aggregation mode dynamic
> description <FrameNameU30>-ICM 3
> quit
> interface range FortyGigE 1/1/5 to FortyGigE 1/1/6
> port link-aggregation group 112
> quit
> interface Bridge-Aggregation 112
> port link-type trunk
> undo port trunk permit vlan 1
> port trunk permit vlan 3193
> quit
> interface Bridge-Aggregation113
> link-aggregation mode dynamic
> description <FrameNameU40>-ICM 6
> quit
> interface range FortyGigE 2/1/5 to FortyGigE 2/1/6
> port link-aggregation group 113
> quit
> interface Bridge-Aggregation 113
> port link-type trunk
> undo port trunk permit vlan 1
> port trunk permit vlan 3194
> quit
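The three BAGG definitions follow one pattern, so they can be generated from a small table. The Python sketch below keeps the command order used in this procedure: create the BAGG, add the member ports, then configure the BAGG as a trunk. It passes each member port range as a plain string instead of defining a named interface range. The frame-name placeholders must be replaced. BAGG 113 is labeled with the U40 frame name because the port map cables FortyGigE2/1/5 and FortyGigE2/1/6 to frame U40. The `BAGGS` table and `bagg_commands` helper are hypothetical.

```python
# (group, member port range, permitted VLANs, description), following the cabling and VLAN tables.
BAGGS = [
    (111, "FortyGigE 1/1/1 to FortyGigE 1/1/2 FortyGigE 2/1/1 to FortyGigE 2/1/2", [1193, 2193], "<FrameNameU30>-ICM"),
    (112, "FortyGigE 1/1/5 to FortyGigE 1/1/6", [3193], "<FrameNameU30>-ICM 3"),
    (113, "FortyGigE 2/1/5 to FortyGigE 2/1/6", [3194], "<FrameNameU40>-ICM 6"),
]


def bagg_commands(group, members, vlans, description):
    """Return Comware CLI lines for one dynamic (LACP) bridge aggregation used as a trunk."""
    bagg = f"interface Bridge-Aggregation{group}"
    return [
        bagg,
        "link-aggregation mode dynamic",
        f"description {description}",
        "quit",
        # Add the physical member ports to the aggregation group.
        f"interface range {members}",
        f"port link-aggregation group {group}",
        "quit",
        # Trunk settings on the BAGG: drop VLAN 1, permit only the listed VLANs.
        bagg,
        "port link-type trunk",
        "undo port trunk permit vlan 1",
        "port trunk permit vlan " + " ".join(str(v) for v in vlans),
        "quit",
    ]


if __name__ == "__main__":
    print("system-view")
    for row in BAGGS:
        print("\n".join(bagg_commands(*row)))
```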

- Assign the switch ports that connect the HPE Nimble Storage data interfaces to the iSCSI VLANs.

> interface range TenGigE 1/2/13 to TenGigE 1/2/14
> port access vlan 3193
> quit
> interface range TenGigE 2/2/13 to TenGigE 2/2/14
> port access vlan 3194
> quit

- Place the HPE Nimble Storage management interface into the management VLAN.

> interface TenGigE 1/2/17
> port access vlan 1193
> quit

- Place the HPE Nimble Storage replication port into the default VLAN (VLAN 1) or a VLAN of your choice.

> interface TenGigE 1/2/15
> port access vlan 1
> quit
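The access-port assignments in the preceding steps can also be generated from a port-to-VLAN map. The Python sketch below is a hypothetical helper. It uses the ports and VLANs from the commands above and places replication in VLAN 1, the default case. It groups ports that share a VLAN into one `interface range` list. A real deployment would substitute its own map.

```python
# Switch port -> access VLAN, as used in the commands above (VLAN 1 is the default VLAN).
ACCESS_PORTS = {
    "TenGigE 1/2/13": 3193,
    "TenGigE 1/2/14": 3193,
    "TenGigE 2/2/13": 3194,
    "TenGigE 2/2/14": 3194,
    "TenGigE 1/2/17": 1193,
    "TenGigE 1/2/15": 1,
}


def access_commands(port_vlans):
    """Return Comware CLI lines placing each port in its access VLAN, grouped by VLAN."""
    by_vlan = {}
    for port, vlan in port_vlans.items():
        by_vlan.setdefault(vlan, []).append(port)
    commands = []
    for vlan, ports in sorted(by_vlan.items()):
        # interface range accepts a space-separated list of interfaces.
        commands += [f"interface range {' '.join(ports)}", f"port access vlan {vlan}", "quit"]
    return commands


if __name__ == "__main__":
    print("\n".join(["system-view"] + access_commands(ACCESS_PORTS)))
```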

- After the switch configuration is complete, enter save and follow the resulting prompts. This saves the running configuration so that it persists across switch reboots.

# HPE Synergy 480 Gen10 Compute Modules

This section describes the connectivity of the HPE Synergy 480 Gen10 Compute Modules used in the creation of this solution. The HPE Synergy 480 Gen10 Compute Modules, regardless of function, were all configured identically. Table 8 describes the host configuration tested for this solution. Server configuration should be based on customer needs, and the configuration used in the creation of this solution might not align with the requirements of any given production implementation.

Table 8. Host configuration

| Component | Quantity |
|---|---|
| HPE Synergy 480/660 Gen10 Intel Xeon-Gold 6130 (2.1GHz/16-core/125W) FIO Processor Kit | 2 per server |
| HPE 8GB (1x 8GB) Single Rank x8 DDR4-2666 CAS-19-19 Registered Smart Memory Kit | 20 per server |
| HPE 16GB (1x 16GB) Single Rank x4 DDR4-2666 CAS-19-19 Registered Smart Memory Kit | 4 per server |
| HPE Synergy 3820C 10/20Gb Converged Network Adapter | 1 per server |
| HPE Smart Array P204i-c SR Gen10 12G SAS controller | 1 per server |
| HPE 1.92TB SATA 6G Mixed Use SFF (2.5in) 3yr Warranty Digitally Signed Firmware SSD | 2 per management host |
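The per-server totals implied by the host configuration can be worked out directly. The short Python calculation below assumes that each processor kit is one CPU with the 16 cores stated in its name. It also assumes that every compute module carries the full memory complement listed.

```python
# Per-server quantities from the host configuration table.
PROCESSORS = 2
CORES_PER_PROCESSOR = 16  # Xeon-Gold 6130 (16-core)
DIMMS = {8: 20, 16: 4}  # DIMM size in GB -> number of DIMMs

cores = PROCESSORS * CORES_PER_PROCESSOR
memory_gb = sum(size_gb * count for size_gb, count in DIMMS.items())
dimm_count = sum(DIMMS.values())
print(f"{cores} cores, {memory_gb} GB RAM in {dimm_count} DIMMs")  # → 32 cores, 224 GB RAM in 24 DIMMs
```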

# HPE Synergy Composer 2

At the core of the management of the HPE Synergy environment is HPE Synergy Composer 2. A pair of HPE Synergy Composers is deployed across frames to provide redundant management of the environment, both for initial deployment and for changes over the lifecycle of the solution. HPE Synergy Composer 2 is used to configure the environment before the operating systems and applications are deployed.

This section guides the installation user through the process of installing and configuring the HPE Synergy Composer.

# Configure the HPE Synergy Composer via VNC

To configure HPE Synergy Composer from a laptop, follow these steps:

Configure the laptop Ethernet port with the static IP address 192.168.10.2/24. No gateway is required.

Use a CAT5e cable to connect the laptop Ethernet port to the laptop port on the front panel module of the HPE Synergy Frame that hosts HPE Synergy Composer.

Use a web browser to access the HPE Synergy console. Start a new browser session and enter http://192.168.10.1:5800.

Click Connect to start HPE OneView for Synergy from the HPE Synergy console.

Click Hardware Setup to connect with Installation Technician user privileges.

In the Appliance Network dialog box, fill in the following information.

a. Appliance host name: Enter the fully qualified domain name of the HPE Synergy Composer.

b. Address assignment: Manual

c. IP address: Enter an IP address on the management network.

d. Subnet mask or CIDR: Enter the subnet mask of the management network.

e. Gateway address: Enter the gateway for the management network.

f. Maintenance IP address 1: Enter a primary maintenance IP address on the management network.

g. Maintenance IP address 2: Enter a secondary maintenance IP address on the management network.

h. Preferred DNS server: Enter the DNS server.

i. IPv6 Address assignment: Unassign

Click OK.

When the hardware discovery process is complete, verify that all HPE Synergy hardware has been discovered and claimed by HPE OneView for Synergy. This includes the Frames, Composer modules, Frame Link modules, Interconnect modules, Compute modules, and storage modules.

Review and correct any issues listed in the hardware setup checklist. The HPE Synergy 12000 Frame Setup and Installation Guide, available at https://psnow.ext.hpe.com/doc/c05348240, provides troubleshooting steps for common issues during hardware setup.

Navigate to OneView -> Settings and then click Appliance. Verify that the active and standby appliances show a status of Connected.
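Before entering values in the Appliance Network dialog box, you may want to confirm that they are consistent. The Python sketch below uses the standard `ipaddress` module for two checks. First, it confirms that the laptop address reaches the console address used above. Second, it confirms that the appliance IP address, both maintenance IP addresses, and the gateway are distinct usable hosts in the management subnet. The 192.0.2.0/24 subnet and host addresses are hypothetical RFC 5737 documentation values, and `check_appliance_network` is an illustrative helper.

```python
import ipaddress


def check_appliance_network(subnet, appliance_ip, maintenance_ips, gateway):
    """Return a list of problems in the Appliance Network values (empty if none)."""
    network = ipaddress.ip_network(subnet)
    addresses = {"appliance": appliance_ip, "gateway": gateway}
    for index, value in enumerate(maintenance_ips, start=1):
        addresses[f"maintenance {index}"] = value
    problems = []
    for role, value in addresses.items():
        ip = ipaddress.ip_address(value)
        # Each address must be inside the subnet and not its network or broadcast address.
        if ip not in network or ip in (network.network_address, network.broadcast_address):
            problems.append(f"{role} address {value} is not a usable host in {network}")
    if len(set(addresses.values())) != len(addresses):
        problems.append("addresses must be unique")
    return problems


# The laptop (192.168.10.2/24) must share a subnet with the console at 192.168.10.1.
LAPTOP = ipaddress.ip_interface("192.168.10.2/24")
CONSOLE = ipaddress.ip_address("192.168.10.1")
assert CONSOLE in LAPTOP.network

# Hypothetical management-network values.
print(check_appliance_network("192.0.2.0/24", "192.0.2.10", ["192.0.2.11", "192.0.2.12"], "192.0.2.1"))  # → []
```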

# Configure appliance credentials

Log in to the HPE OneView for Synergy Web Administration portal. Review and accept the License Agreement.

In the HPE OneView Support dialog box, verify that Authorized Service is set to Enabled. Click OK.

Log in as Administrator with the default password admin. Set the new password to <<composer_administrator_password>> and click OK.

# Configure solution firmware

This solution adheres to the firmware recipe specified in the HPE Converged Solutions 750 specification, which can be found in the CS750 Firmware and Software Compatibility Matrix. The solution used the latest firmware recipe available as of March 2020, including HPE OneView for Synergy 5.0.

HPE Synergy firmware is managed according to HPE Synergy software releases. Each release includes the software version of HPE OneView for Synergy on HPE Synergy Composer and the HPE Synergy Custom SPP firmware bundle for all other HPE Synergy components. At this stage, update the HPE Synergy Composer. The guided setup takes care of the firmware updates for the remaining HPE Synergy components.

# Solution configuration

The installation user should use the HPE Synergy guided setup to complete the following solution configuration. Perform all guided setup steps that are relevant to your environment and skip those that are not.

In the upper right-hand corner, click Guided setup -> First step to begin the guided setup.

Select the appropriate check boxes based on your solution under the Customize OneView Experience heading.

Click << to see the list of recommended appliance configuration steps based on the previous selection.

Proceed through the Guided setup process following the recommendations outlined in the subsequent sections. Place a check mark in the complete box for each step that is successfully completed.

# Create additional users

Hewlett Packard Enterprise recommends that you create a read-only user and an administrator account with a username other than Administrator.

# Create an IP pool on the management network

Follow the guided setup to create an IP pool on the management network. This pool provides management IP addresses, including those for the HPE device iLOs, within the solution. Ensure that the pool is enabled before proceeding.
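When choosing the pool's start and end addresses, confirm that the range sits inside the management subnet. It should also avoid addresses already assigned, such as the gateway and the HPE Synergy Composer appliance and maintenance addresses. The Python sketch below performs these checks with the standard `ipaddress` module. The subnet, range, reserved addresses, and the `pool_size` helper are hypothetical.

```python
import ipaddress


def pool_size(subnet, start, end, reserved):
    """Validate a start-end range for an IP pool and return the number of addresses in it."""
    network = ipaddress.ip_network(subnet)
    first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
    inside = first in network and last in network and first <= last
    # The network and broadcast addresses cannot be handed out.
    if not inside or first == network.network_address or last == network.broadcast_address:
        raise ValueError(f"{start}-{end} is not a usable range inside {network}")
    clashes = [value for value in reserved if first <= ipaddress.ip_address(value) <= last]
    if clashes:
        raise ValueError(f"range overlaps reserved addresses: {clashes}")
    return int(last) - int(first) + 1


# Hypothetical values: the gateway (.1) and Composer addresses (.10-.12) stay outside the pool.
RESERVED = ["192.0.2.1", "192.0.2.10", "192.0.2.11", "192.0.2.12"]
print(pool_size("192.0.2.0/24", "192.0.2.100", "192.0.2.199", RESERVED))  # → 100
```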

# Configure Ethernet networks

As explained in the Network definition section of this document, the solution uses four (4) network segments. Use the Create network section of the OneView Guided Setup wizard to define the networks. Table 9 describes the networks defined within HPE Synergy Composer for this solution. Your VLAN values will likely differ.

Table 9. Network defined within HPE Synergy Composer for this solution

| Network Name | Network Type | VLAN Number |
|---|---|---|
| Management | Ethernet | 1193 |
| Data center | Ethernet | 2193 |
| iSCSI_SAN_A | Ethernet | 3193 |
| iSCSI_SAN_B | Ethernet | 3194 |

The management network should be associated with the management IP pool specified by the user. The installation user should create any additional networks required for the solution.

# Create Logical Interconnect Groups

Within Composer, use the Guided Setup to create a Logical Interconnect Group (LIG) with three (3) uplink sets defined. For this solution, the uplink sets are named Network, iSCSI_SAN_A, and iSCSI_SAN_B. The Network uplink set carries all other networks defined for the solution, and each iSCSI uplink set carries its respective iSCSI VLAN. Table 5 defines the ports used to carry the uplink sets.
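Each uplink set should carry exactly the VLANs that its switch BAGG permits. A mismatch on either side can leave a network without an uplink path. The Python sketch below cross-checks the Composer networks, the uplink sets, and the BAGG trunk permits. It assumes two things: the pairing of uplink sets to BAGGs 111, 112, and 113, inferred from the ports each uses, and that each network appears in exactly one uplink set. The dictionaries and `find_mismatches` are illustrative names.

```python
# Composer networks: name -> VLAN ID.
NETWORKS = {"Management": 1193, "Data center": 2193, "iSCSI_SAN_A": 3193, "iSCSI_SAN_B": 3194}

# LIG uplink sets: name -> (networks carried, switch BAGG the uplink ports land on).
UPLINK_SETS = {
    "Network": (["Management", "Data center"], 111),
    "iSCSI_SAN_A": (["iSCSI_SAN_A"], 112),
    "iSCSI_SAN_B": (["iSCSI_SAN_B"], 113),
}

# VLANs permitted on each switch BAGG trunk.
BAGG_PERMITS = {111: {1193, 2193}, 112: {3193}, 113: {3194}}


def find_mismatches(networks, uplink_sets, bagg_permits):
    """Return human-readable mismatches between uplink sets and BAGG trunk permits."""
    problems = []
    carried = [name for names, _ in uplink_sets.values() for name in names]
    for name in networks:
        if carried.count(name) != 1:
            problems.append(f"network {name} is carried by {carried.count(name)} uplink sets")
    for uplink, (names, bagg) in uplink_sets.items():
        vlans = {networks[name] for name in names}
        permitted = bagg_permits.get(bagg, set())
        if vlans != permitted:
            problems.append(
                f"uplink set {uplink} carries {sorted(vlans)} but BAGG {bagg} permits {sorted(permitted)}"
            )
    return problems


print(find_mismatches(NETWORKS, UPLINK_SETS, BAGG_PERMITS))  # → []
```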

# Create Enclosure Group

From the OneView Guided Setup, select Create enclosure group.

Provide a name and enter the number of frames.

Select Use address pool and utilize the management pool defined earlier.

Use the Logical Interconnect Group from the previous section in the creation of the Enclosure Group.

Select Create when ready.

# Create Logical Enclosure

Use the Guided Setup to create a logical enclosure that includes all three (3) enclosures. Select the firmware you uploaded earlier as the baseline. The firmware update across the solution stack can take some time. Select Actions and then Update Firmware to ensure that the firmware complies with the baseline, then click Cancel to exit.

# Solution storage

HPE Nimble Storage AF40 provides persistent block storage in this solution. Volumes from the HPE Nimble Storage array are used for Docker data, to host the repository that stores container images, and to provide persistent volumes for applications.

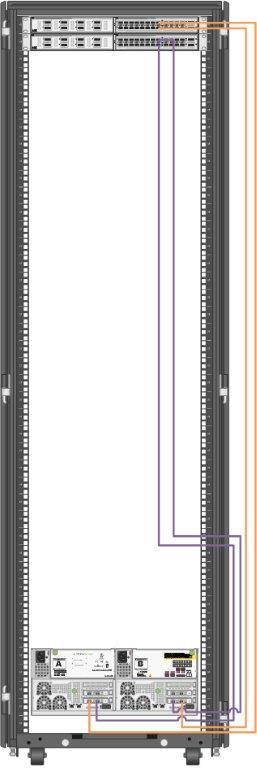

Figure 7 shows the cabling of the HPE Nimble Storage AF40 to the HPE switching used in this solution. For clarity, the diagram shows the storage and switching in the same rack. As implemented in this solution, the switching resided in the HPE Synergy rack. The orange and purple wires in Figure 7 represent the separate iSCSI VLANs. The figure shows two HPE Nimble Storage arrays, which were implemented to provide replication. In a real-world implementation, these arrays would be placed in separate physical locations to maximize redundancy and minimize points of failure. Examples include two separate data centers, separate buildings, or separate sections of the same data center.

Figure 7. Cabling of the HPE Nimble Storage arrays to the HPE FF 5945 switches

NOTE

HPE Nimble Storage AF40 is also connected to the same top-of-rack switches described in the Configuring the solution switching section of this document.

# Configuring solution storage

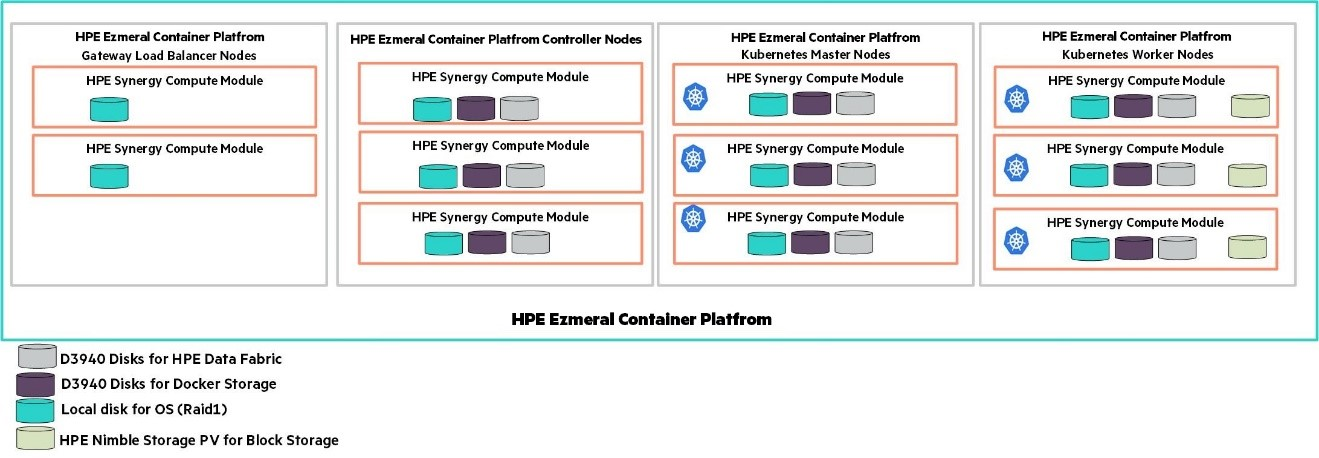

The HPE Synergy D3940 Storage Module provides the SSDs used for node storage. HPE Ezmeral Data Fabric is a distributed file and object store from MapR that manages both structured and unstructured data. It provides the persistent volumes for the container workload.

Figure 8 describes the logical storage layout used in the solution. The HPE Synergy D3940 Storage Module can provide either SAS volumes or JBOD; this solution uses JBOD.


Figure 8. Logical storage layout within the solution

Table 10 lists all volumes used within the solution and identifies which storage provides the capacity and performance for each function.

Table 10. Volumes used in this solution

| Volume/Disk Function | Qty | Size | Source | Hosts | Shared/Dedicated |
|---|---|---|---|---|---|
| Node Storage | 3 | 960GB | HPE Synergy D3940 storage | HPE Ezmeral Container Platform controller nodes | dedicated |
| HPE Ezmeral Data Fabric | 3 | 960GB | HPE Synergy D3940 storage | HPE Ezmeral Container Platform shadow controller nodes | dedicated |
| Node Storage | 6 | 960GB | HPE Synergy D3940 storage | HPE Ezmeral Container Platform compute nodes | dedicated |
| HPE Ezmeral Data Fabric | 6 | 960GB | HPE Synergy D3940 storage | HPE Ezmeral Container Platform compute nodes | dedicated |
| Operating System | 11 | 2 x 400GB configured as RAID 1 | Synergy Local Storage | All nodes | dedicated |
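The volume table also implies the raw capacity drawn from each source. The Python sketch below totals it under two assumptions. For the HPE Synergy D3940 rows, Qty counts individual 960GB drives. For the operating system row, Qty counts hosts, each with two 400GB drives mirrored as RAID 1. Capacities are in decimal gigabytes, as listed.

```python
# (function, hosts, drive count, drive size in GB) for the HPE Synergy D3940 rows.
D3940_ROWS = [
    ("Node Storage", "controller nodes", 3, 960),
    ("HPE Ezmeral Data Fabric", "shadow controller nodes", 3, 960),
    ("Node Storage", "compute nodes", 6, 960),
    ("HPE Ezmeral Data Fabric", "compute nodes", 6, 960),
]
OS_HOSTS, OS_DRIVES_PER_HOST, OS_DRIVE_GB = 11, 2, 400

# Sum raw D3940 capacity per storage function.
by_function = {}
for function, _hosts, count, size_gb in D3940_ROWS:
    by_function[function] = by_function.get(function, 0) + count * size_gb

d3940_drives = sum(count for _, _, count, _ in D3940_ROWS)
d3940_raw_gb = sum(by_function.values())
os_raw_gb = OS_HOSTS * OS_DRIVES_PER_HOST * OS_DRIVE_GB
os_usable_gb = OS_HOSTS * OS_DRIVE_GB  # RAID 1 mirrors each pair, leaving half the raw capacity

print(by_function)  # → {'Node Storage': 8640, 'HPE Ezmeral Data Fabric': 8640}
print(d3940_drives, d3940_raw_gb, os_raw_gb, os_usable_gb)  # → 18 17280 8800 4400
```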

The HPE Nimble Storage AF40 ports map to the HPE FF 5945 switching as described in Table 11. The replication array would be cabled in the same fashion, but generally to separate switching in a separate physical location. All switch ports are configured as access ports, so the VLANs are untagged (see Configuring the solution switching). The redundant array is implemented in a separate location with the same physical configuration.

Table 11. HPE Nimble Storage AF40 port mapping

| HPE Nimble Storage AF40 Port | VLAN Number | Switch Port |
|---|---|---|
| Management Port Eth1, Controller A | 1193 | TenGigE1/2/17 |
| Management Port Eth1, Controller B | 1193 | TenGigE2/2/17 |
| Controller A TG1 | 3193 | TenGigE1/2/13 |
| Controller A TG2 | 3194 | TenGigE2/2/13 |
| Controller B TG1 | 3193 | TenGigE1/2/14 |
| Controller B TG2 | 3194 | TenGigE2/2/14 |
| Replication Port Eth2 | Default | TenGigE1/2/15 |
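In this port mapping, each controller reaches both iSCSI VLANs, and each iSCSI VLAN stays on a single IRF member. The Python sketch below checks those two properties. It assumes that the first number in an interface name such as TenGigE2/2/13 is the IRF member ID. `DATA_PORTS` and `check_paths` are illustrative names.

```python
import string

# Data ports from the port mapping table: (controller, port) -> (VLAN, switch port).
DATA_PORTS = {
    ("A", "TG1"): (3193, "TenGigE1/2/13"),
    ("A", "TG2"): (3194, "TenGigE2/2/13"),
    ("B", "TG1"): (3193, "TenGigE1/2/14"),
    ("B", "TG2"): (3194, "TenGigE2/2/14"),
}
ISCSI_VLANS = {3193, 3194}


def irf_member(interface):
    """Return the IRF member ID, assumed to be the first number in names like TenGigE2/2/13."""
    return int(interface.lstrip(string.ascii_letters).split("/")[0])


def check_paths(data_ports):
    """Return problems with the iSCSI multipath layout (empty list if none)."""
    problems = []
    # Every controller should have a port in every iSCSI VLAN.
    for controller in sorted({ctrl for ctrl, _ in data_ports}):
        vlans = {vlan for (ctrl, _), (vlan, _) in data_ports.items() if ctrl == controller}
        if vlans != ISCSI_VLANS:
            problems.append(f"controller {controller} reaches VLANs {sorted(vlans)} only")
    # Every iSCSI VLAN should land on exactly one IRF member.
    for vlan in sorted(ISCSI_VLANS):
        members = {irf_member(port) for port_vlan, port in data_ports.values() if port_vlan == vlan}
        if len(members) != 1:
            problems.append(f"VLAN {vlan} spans IRF members {sorted(members)}")
    return problems


print(check_paths(DATA_PORTS))  # → []
```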

NOTE

The scripts and reference files provided with this document are included as examples of how to build the solution and are not supported by Hewlett Packard Enterprise. The user is expected to modify the scripts and reference files to meet the requirements of the deployment environment prior to installation.